As technologies such as Artificial Intelligence, Machine Learning and Data Science algorithms grow more sophisticated, computational decision-making is becoming increasingly automated, relying on trained systems to generate useful outcomes.

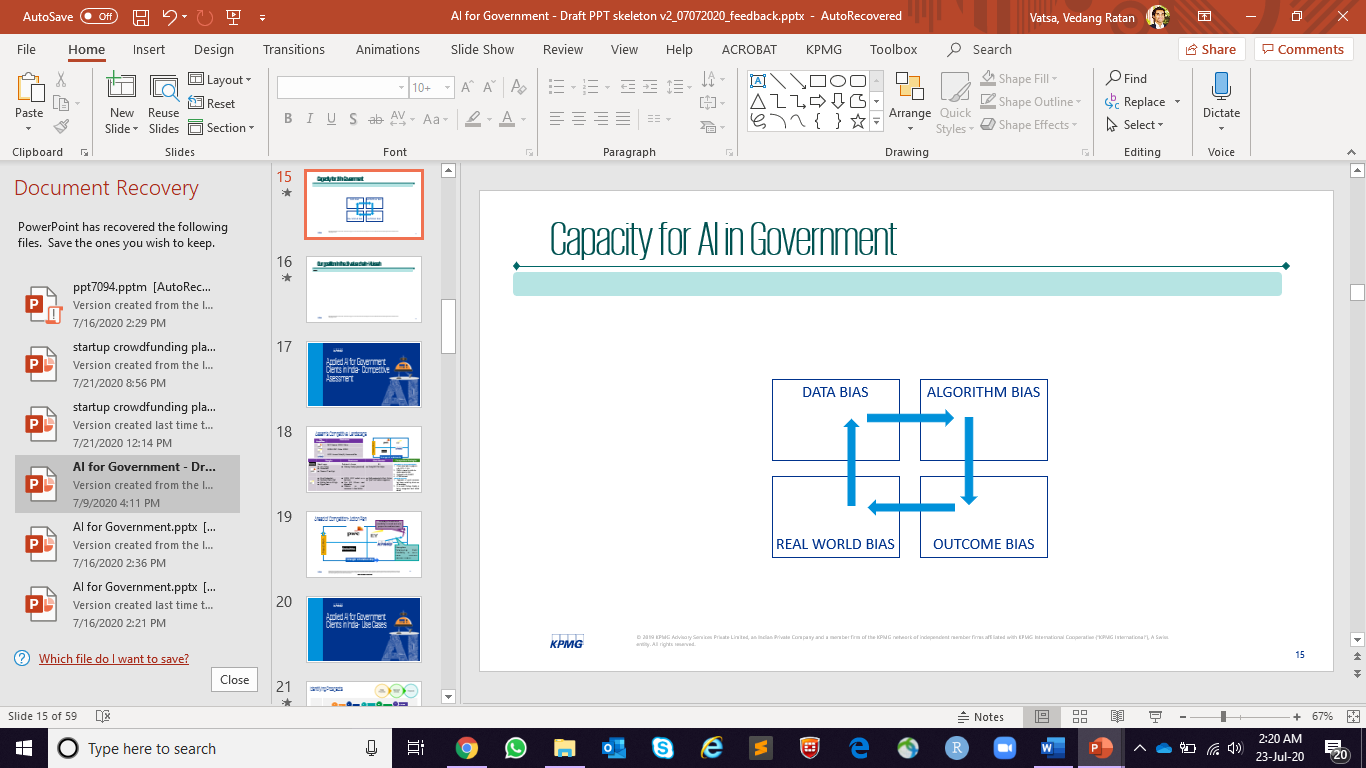

Given how computer-based decision-making works, such systems can produce biased outputs, and those outputs may in turn become a source of bias in the real world.

Technological advancements over the last few decades have made us more dependent on computer systems than ever before. These technologies automate decision-making at multiple levels, whether on social media, online shopping, search engines, voting behaviour or countless other areas. While this makes our lives simpler on many fronts and has proved very useful, computer scientists have noticed, in hindsight, that it also has some negative impacts.

Algorithmic bias refers to systematic and repeatable errors in computational outcomes that make the process unfair. Today, algorithms are ubiquitous and play a dominant role in our daily lives.

Many of our decisions are influenced by the algorithms embedded in digital systems, which makes algorithmic bias a critical issue to consider. Algorithms are usually trained on datasets, and very often these datasets are not even adequately labelled. An algorithm generally gets better at a task the more data it is trained on, but this training data is often produced with limited accuracy, which creates a foundation on which bias can take form.

To understand and estimate the impact of possible bias or error in any system, it is important to consider the following questions:

- Who are the beneficiaries of the final decisions, and how can they be affected by any biased result?

- How was the training data chosen to develop the system?

- How can the algorithm be tested against different kinds of input?

- What methods can ensure diversity is included in the design and implementation phases?

- How might a specific set of outputs benefit the stakeholders concerned?

- What interventions may be required to limit the impact of bias on the result?

- How transparent can the design of the algorithm be?

How can algorithms be biased?

To examine bias in algorithms closely, it is essential to study three components: the input, the code and the context. Bias may arise in any of them. The first source is the input: a machine learning algorithm learns patterns from its training datasets and generates outcomes based on those patterns, so the type of data it is given shapes what it learns.

For instance, if a system learns from policy literature written with male pronouns, it may conclude that the associated provisions are relevant or applicable only to men, creating a basis for gender bias to emerge within the system.
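One way to make the input-data point concrete is to measure how skewed a text corpus is towards male pronouns before it is used for training. The Python sketch below is a minimal illustration under stated assumptions: the pronoun lists, the function names `pronoun_counts` and `male_share`, and the sample sentences are hypothetical, and a high male share is only a warning sign, not proof that a model trained on the text will be biased.

```python
import re
from collections import Counter

# Hypothetical pronoun inventories; a real audit would need richer,
# language-specific lists and would handle ambiguous forms more carefully.
MALE = {"he", "him", "his", "himself"}
FEMALE = {"she", "her", "hers", "herself"}


def pronoun_counts(documents):
    """Count male and female pronouns across a list of text documents."""
    counts = Counter()
    for doc in documents:
        # Split into lowercase word tokens so "here" is not read as "he".
        for token in re.findall(r"[a-z]+", doc.lower()):
            if token in MALE:
                counts["male"] += 1
            elif token in FEMALE:
                counts["female"] += 1
    return counts


def male_share(documents):
    """Fraction of gendered pronouns that are male, or None if there are none."""
    counts = pronoun_counts(documents)
    total = counts["male"] + counts["female"]
    return counts["male"] / total if total else None


# Hypothetical snippets standing in for policy text used as training data.
corpus = [
    "The applicant must submit his documents before he is interviewed.",
    "Each officer shall file his report.",
    "She may appeal the decision.",
]
print(male_share(corpus))  # 0.75
```

A share far from balance suggests the corpus should be rebalanced or rewritten in gender-neutral language before training.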

A second source of bias is the code that enables a program to learn from the input datasets and produce output by applying its logic. A lack of diversity in software development teams may result in logic that reflects a narrow, non-exhaustive understanding of the problem, so the system may produce results that do not account for the full range of expected cases.

The third source is the context, where the input data meets the code written for a program. A significant aspect of any real-world digital product is the business model that drives its finances. A motivation to produce a specific kind of output can pave the way for bias to creep into the algorithm.

For example, a news platform seeking to maximise its profits may, wittingly or unwittingly, concentrate on a particular subject and give less attention to other, potentially more important, issues or incidents.

There is an immediate need to stimulate discussion around the technological aspects of governance and to facilitate policy development that promotes fair and ethical practices in building digital products for social good, especially in the context of Artificial Intelligence.

What can we do?

Keeping algorithmic bias in check includes updating numerous laws to cover digital processes and procedures, including those that draw on data from data-driven digital systems.

As digital service delivery advances, it is pivotal to have regulatory checks in place, perhaps in the form of an auditing infrastructure for digital solutions. Sandboxes that encourage anti-bias design of algorithms may further help detect how sensitive a system is to bias.

It is important to have guidelines in the form of anti-bias self-regulatory practices for technology organisations. Sandboxes are already encouraging innovation among companies in the financial sector that are keen to use technology for innovation and service delivery.

As we move towards digital inclusion, digital exclusion may emerge as a new form of vulnerability. Another measure could be the introduction of Corporate Digital Responsibility, in the form of ethical digital practices that ensure technology implementation strikes a balanced trade-off between profitability (direct or indirect) and usability (experience, design and privacy).

While it is essential for the state to emphasise practices that curb such bias, it is equally imperative to make users more literate about the algorithms embedded in digital products, especially those behind essential services.

It is important to define ethical standards for using advanced technologies such as Machine Learning and Artificial Intelligence across various processes and implementations, such as the Internet of Things, so that growth is holistic and reaches large parts of society.

Algorithm development needs to consider diversity, starting with the development team and the input data. In some cases, a bias may change the overall process and output only minimally. Still, when a system serves a very large number of users, even a small effect can undermine the diversity-by-design principle.

An independent review may further improve the effectiveness of the overall system. A formal audit of the algorithms by an independent entity can check for bias in both the input data and the output decisions.
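The output side of such an audit can be sketched in a few lines. The Python example below is a minimal illustration, not a standard auditing procedure: it compares the rate of favourable decisions across groups and reports the ratio of the lowest rate to the highest. The 0.8 threshold reflects the "four-fifths" rule of thumb from US employment-discrimination guidelines; the group labels, the loan log and the function names are hypothetical.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Share of favourable decisions per group.

    `decisions` is a list of (group, approved) pairs, where `approved` is a bool.
    """
    totals = defaultdict(int)
    approvals = defaultdict(int)
    for group, approved in decisions:
        totals[group] += 1
        if approved:
            approvals[group] += 1
    return {g: approvals[g] / totals[g] for g in totals}


def disparate_impact_ratio(decisions):
    """Lowest group selection rate divided by the highest.

    Values below 0.8 are often flagged under the "four-fifths" rule of thumb.
    Assumes `decisions` is non-empty.
    """
    rates = selection_rates(decisions)
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected, so no group is favoured
    return min(rates.values()) / highest


# Hypothetical loan decisions logged from a model under audit.
log = ([("A", True)] * 60 + [("A", False)] * 40
       + [("B", True)] * 30 + [("B", False)] * 70)

print(selection_rates(log))           # {'A': 0.6, 'B': 0.3}
ratio = disparate_impact_ratio(log)
print(round(ratio, 2), ratio < 0.8)   # 0.5 True
```

A low ratio does not by itself establish unfairness, since groups may differ on legitimate factors, but it tells an auditor where to look more closely at the input data and the model's logic.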

With the continued emphasis on digital transformation, accelerated by the ongoing pandemic, the adoption of digital processes is very likely to grow rapidly. According to Gartner, by 2024, 75% of large enterprises will be using at least four low-code development tools for both IT application development and citizen development initiatives.

Gartner also predicts that by 2021, over 75% of midsize and large organisations will have adopted a multi-cloud and/or hybrid IT strategy. While continued innovation in digital products may improve the lives of millions, it is essential to ensure that it does not become a cause of digital exclusion rather than inclusion.

(Disclaimer: The views and opinions expressed in this article are those of the author and do not necessarily reflect the views of YourStory.)

Link: https://yourstory.com/2020/10/bias-free-algorithm-artificial-intelligence

Author: Vedang Vatsa

October 05, 2020 at 02:50PM

YourStory